OSS LLM Model Name Codes Explained

Hello Coders! 👾

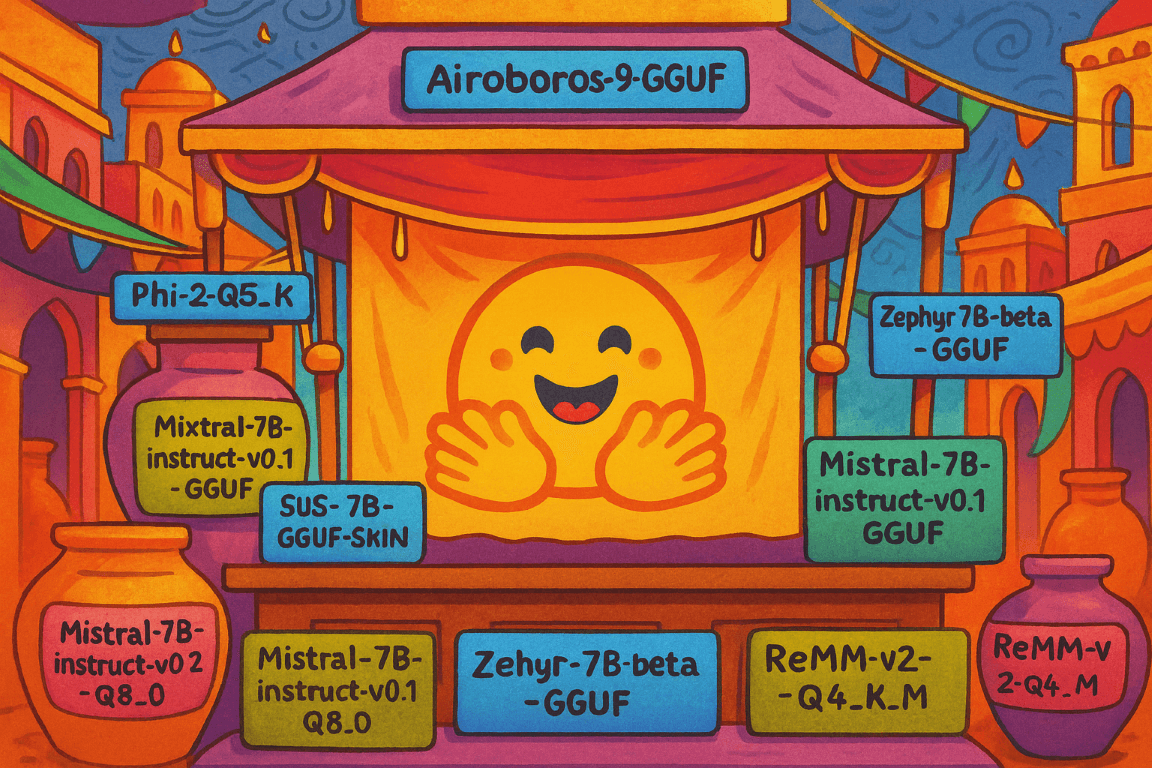

If you’ve ever worked with open-source LLMs in tools like LM Studio, you’ve probably seen model names like Qwen3-14B-Claude-Sonnet-4.5-Reasoning-Distill-GGUF or Llama-3.1-8B-GPT-4-CodeInstruct-bnb-4bit. At first you spot the well-known names in there, like ‘Claude-Sonnet’ or ‘GPT-4’, but the rest quickly starts to look like a jumble of random words, codes and numbers. These names actually follow a logical structure that reveals a lot about the model’s architecture, training method, and intended use. To make it easier for myself to remember, I wrote it down in this blog post.

Base Model

This is the foundation, the original pretrained checkpoint. I see it as the “raw” model after it learns general language patterns (usually by predicting the next token), but before it’s shaped into a helpful chat assistant. So if you prompt a base model like a chatbot, it can still respond, but it might not follow instructions reliably.

In the open-source world, this base checkpoint is what people build on top of: you’ll often get an -Instruct or -Chat variant fine-tuned from it, plus community fine-tunes for code, roleplay, or a specific domain. And even when the weights are the same, you’ll see the base repackaged in different formats or quantizations so it can run locally.

For example:

- Qwen3-14B means Qwen model family version 3, with 14 billion parameters.

- Llama-3.1-8B means Meta’s Llama version 3.1, with 8 billion parameters.

- Nemotron-8B means NVIDIA’s Nemotron model with 8 billion parameters.

- GPT-OSS-20B means OpenAI’s open-weight GPT model with about 20 billion parameters.
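To make the “family + size” pattern concrete, here is a minimal Python sketch that pulls the parameter count out of a name. It assumes the size is written as its own dash-separated token ending in B (billions) or M (millions), like the examples above; the function name parse_param_count is just for illustration, and since names aren’t standardized this is a heuristic, not a rule.

```python
import re
from typing import Optional

# Matches a size token like "14B", "8B", "20B" or "0.5B" ("M" for millions).
# Assumption: the size is its own dash-separated token, as in the examples above.
SIZE_RE = re.compile(r"^(\d+(?:\.\d+)?)([BM])$", re.IGNORECASE)


def parse_param_count(model_name: str) -> Optional[float]:
    """Return the parameter count from the first size token, or None if none is found."""
    for token in model_name.split("-"):
        match = SIZE_RE.match(token)
        if match:
            value = float(match.group(1))
            scale = 1e9 if match.group(2).upper() == "B" else 1e6
            return value * scale
    return None


for name in ["Qwen3-14B", "Llama-3.1-8B", "Nemotron-8B", "GPT-OSS-20B"]:
    print(f"{name}: {parse_param_count(name):,.0f} parameters")
```

Note that the version number (the 3.1 in Llama-3.1) is skipped because it has no B suffix, which is exactly the family-vs-size split described above.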

Teacher / Distillation Target

This tells you which larger model the “student” is trying to copy. So this does not mean you are running Claude, GPT, etc. on your machine, even though the model name might suggest it. What usually happens is that someone takes a base model (like Qwen or Llama), generates a big pile of answers with a stronger “teacher” model, and then fine-tunes the smaller model on those input-output pairs so it learns a similar writing style, formatting, and problem-solving behavior.

So Qwen3-14B-Claude-Sonnet-4.5-... typically means that the Qwen-based model was trained to imitate Claude Sonnet-like outputs. At runtime in LM Studio you only load the student model file; the teacher is no longer involved. Also worth saying: these teacher tags are sometimes loose or marketing-ish, so treat them as a hint about the goal, not a guarantee of any official connection.
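The distillation recipe above can be sketched in a few lines of Python. This is a minimal illustration, not anyone’s actual pipeline: fake_teacher is a hypothetical stand-in for a call to the stronger model, and the chat-style “messages” record layout is an assumption (it’s a common convention for fine-tuning data, but every training framework defines its own schema).

```python
import json
from typing import Callable, Dict, Iterable, List


def build_distillation_records(
    prompts: Iterable[str], ask_teacher: Callable[[str], str]
) -> List[Dict]:
    """Pair each prompt with the teacher's answer as a chat-style training record."""
    records = []
    for prompt in prompts:
        answer = ask_teacher(prompt)  # the only place the teacher is ever used
        records.append(
            {
                "messages": [
                    {"role": "user", "content": prompt},
                    {"role": "assistant", "content": answer},
                ]
            }
        )
    return records


def fake_teacher(prompt: str) -> str:
    # Hypothetical placeholder: a real pipeline would query the teacher model here.
    return f"Step-by-step answer to: {prompt}"


records = build_distillation_records(["What is 2 + 3?"], fake_teacher)
# One JSON object per line (JSONL), a common layout for fine-tuning datasets.
jsonl = "\n".join(json.dumps(record) for record in records)
print(jsonl)
```

The student is then fine-tuned on records like these, and only the student’s weights end up in the file you download.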

Training Method / Specialization

This part tells you what they did on top of the base model to push it into a certain behavior. I read it as: “what kind of fine-tuning or extra training made this model different from the plain base checkpoint?”. These tags can also stack, so a model can be both distilled and instruction-tuned, or aimed at reasoning and code at the same time.

Examples: Reasoning-Distill, Opus-Distill-i1, StoryWriter, CodeInstruct

Meaning:

- Distill: trained on outputs from a stronger teacher model, so the smaller model learns to respond in a similar way.

- Reasoning: tuned with data that rewards multi-step problem solving (math, logic, structured reasoning).

- StoryWriter: tuned for long-form writing with a more narrative style and better consistency over many paragraphs.

- Instruct: tuned to follow prompts more reliably (so it behaves more like a chat assistant instead of raw text completion).

- CodeInstruct: like instruct tuning, but with lots of programming tasks (write code, explain code, fix bugs, follow coding constraints).
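Because these tags stack, a quick way to read them is to check a name against a small lookup table. The sketch below is a hypothetical helper, not a standard tool: the table only covers the tags listed above, and it matches whole dash-separated tokens, so it will miss spellings that uploaders invent.

```python
from typing import List, Tuple

# Hypothetical lookup table built from the tag descriptions above.
METHOD_TAGS = {
    "distill": "trained on outputs from a stronger teacher model",
    "reasoning": "tuned for multi-step problem solving",
    "storywriter": "tuned for long-form narrative writing",
    "instruct": "tuned to follow prompts like a chat assistant",
    "codeinstruct": "instruction-tuned with lots of programming tasks",
}


def describe_methods(model_name: str) -> List[Tuple[str, str]]:
    """Return (tag, meaning) for each dash-separated token that is a known tag."""
    # Exact token matching, so "CodeInstruct" is not also counted as "Instruct".
    tokens = [token.lower() for token in model_name.split("-")]
    return [(token, METHOD_TAGS[token]) for token in tokens if token in METHOD_TAGS]


for tag, meaning in describe_methods("Qwen3-14B-Claude-Sonnet-4.5-Reasoning-Distill-GGUF"):
    print(f"{tag}: {meaning}")
```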

Dataset

This is where it often gets very “Hugging Face-ish”. I read this part as: “what data did they use to fine-tune/distill this model?”. Sometimes it’s a public dataset repo name, sometimes it’s a private dataset and you only get a hint in the model name.

Important detail: you don’t necessarily need this dataset to run the model. It’s mainly useful to understand why the model behaves the way it does (more reasoning, more code, more chat style, etc.).

Example: TeichAI/claude-sonnet-4.5-high-reasoning-250x

Meaning: the dataset (or dataset repo) used during training. In this example it’s a community dataset aimed at “high reasoning” style outputs.

Quantization / Format

This part is about how the model is stored and shipped so it can run on your hardware. I usually split it in my head like this: format (how the file is packaged) and quantization (how much the weights are compressed).

Quantization is basically a trade: smaller model files and lower RAM/VRAM usage, in exchange for a bit of quality loss (sometimes you won’t notice it, sometimes you do).

Examples: GGUF, bnb-4bit, Q4_K_M

Meaning:

- GGUF: file format commonly used for local inference (llama.cpp, LM Studio). GGUF models are often already quantized, and the exact quant level is usually included in the filename too.

- bnb-4bit: weights stored in 4-bit using bitsandbytes, mostly used in PyTorch-based runtimes.

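- Q4_K_M: a llama.cpp quantization preset. The Q4 means mostly 4-bit weights, the K refers to llama.cpp’s “k-quant” scheme, and the M is the medium variant; together it tries to balance speed, size, and quality.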

- Impact: smaller downloads and easier local running, but slightly reduced accuracy compared to full precision.
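The size side of this trade is mostly simple arithmetic: file size ≈ parameter count × bits per weight ÷ 8. The sketch below uses only that rule of thumb. It ignores metadata and any tensors kept at higher precision (mixed presets like Q4_K_M do this), so real files come out somewhat larger than the pure 4-bit estimate; the bit widths are chosen just for illustration.

```python
def estimate_size_gb(num_params: float, bits_per_weight: float) -> float:
    """Rough size of the weights alone, in gigabytes (1 GB = 10**9 bytes)."""
    total_bytes = num_params * bits_per_weight / 8  # 8 bits per byte
    return total_bytes / 1e9


params = 14e9  # a 14B model such as Qwen3-14B
for label, bits in [("16-bit (FP16/BF16)", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{label}: ~{estimate_size_gb(params, bits):.1f} GB")
```

So a 14B model drops from roughly 28 GB at 16-bit to about 7 GB at a pure 4-bit width. Actual quantized files land a bit above that floor, and at runtime you also need memory for the context (the KV cache), so treat these numbers as a lower bound.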

Extra Tags

These are the “everything else” labels. I treat them as small hints about the release: versioning, architecture tweaks, or intended workflow. They’re not standardized, so two uploaders can use different words for the same idea.

- i1 → usually an iteration/version of the distillation or fine-tune run, although on some GGUF quant repos it marks importance-matrix (imatrix) quantization instead.

- Orchestrator → tuned to route tasks or coordinate other tools/models (more agent-like behavior).

- Hybrid → usually means some mix of approaches (for example dense + MoE), depending on what the author describes.

Example Breakdown

If I see this:

Qwen3-14B-Claude-Sonnet-4.5-Reasoning-Distill-GGUF

Then I read it like:

- Base model: Qwen3-14B (that’s the actual foundation and size)

- Teacher: Claude-Sonnet-4.5 (training target style, not what you run locally)

- Method: Reasoning-Distill (distilled with a reasoning-focused dataset)

- Format: GGUF (packaged for local inference, typically with some quantization involved)
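The same reading order can be turned into a small heuristic parser. This is a rough sketch under clear assumptions: the name is dash-separated, the base model ends at the first size token (like 14B), the method and format tags come from small hand-written sets based on the sections above, and any leftover token is read as the teacher. The names break_down, KNOWN_METHODS and KNOWN_FORMATS are made up for this example.

```python
import re
from typing import Dict, List

# Size token such as "14B" or "8B"; assumed to mark the end of the base model part.
SIZE_RE = re.compile(r"^\d+(?:\.\d+)?[BM]$", re.IGNORECASE)
# Hand-picked sets based on the sections above (an assumption, not a standard).
KNOWN_METHODS = {"reasoning", "distill", "storywriter", "instruct", "codeinstruct"}
KNOWN_FORMATS = {"gguf", "bnb", "4bit"}
# llama.cpp-style quant presets such as Q4_K_M or Q8_0.
QUANT_RE = re.compile(r"^Q\d+_(?:K(?:_[SML])?|\d)$", re.IGNORECASE)


def break_down(model_name: str) -> Dict[str, List[str]]:
    """Split a dash-separated model name into base, teacher, method and format parts."""
    parts: Dict[str, List[str]] = {"base": [], "teacher": [], "method": [], "format": []}
    tokens = model_name.split("-")
    size_index = next((i for i, t in enumerate(tokens) if SIZE_RE.match(t)), None)
    if size_index is None:
        return parts  # no recognizable size token, so don't guess
    # Base model: everything up to and including the size token.
    parts["base"] = tokens[: size_index + 1]
    # The rest: known method and format tags; anything unrecognized is read as the teacher.
    for token in tokens[size_index + 1 :]:
        lowered = token.lower()
        if lowered in KNOWN_METHODS:
            parts["method"].append(token)
        elif lowered in KNOWN_FORMATS or QUANT_RE.match(token):
            parts["format"].append(token)
        else:
            parts["teacher"].append(token)
    return parts


for name in [
    "Qwen3-14B-Claude-Sonnet-4.5-Reasoning-Distill-GGUF",
    "Llama-3.1-8B-GPT-4-CodeInstruct-bnb-4bit",
]:
    print(name)
    for key, value in break_down(name).items():
        print(f"  {key:>7}: {'-'.join(value) or '(none)'}")
```

The “leftover means teacher” rule is the weakest part: an extra tag like i1 or a dataset hint would also land there, which is a good reminder that these names are conventions, not a spec.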

Takeaway

When I’m scanning model names, this is my quick checklist:

- Base model = what it started as (family + size)

- Teacher = what it tried to imitate during training (if any)

- Method = what kind of fine-tune/distill shaped the behavior

- Dataset = what data pushed it in that direction

- Quantization / format = how it’s packaged so it can run on my machine

Hopefully this breakdown helps a bit with understanding the cryptic model names! Once you get the hang of it, you can quickly understand what a model is all about just from its filename.

Happy Coding! 🚀