Eliza Effect

Hello Coders! 👾

I recently learned about a fascinating phenomenon around AI called the Eliza effect. This effect highlights our tendency to attribute more understanding or intelligence to AI systems than they really have. It reflects our natural inclination to see human-like qualities in machines, despite their underlying simplicity.

Why is it called the Eliza Effect?

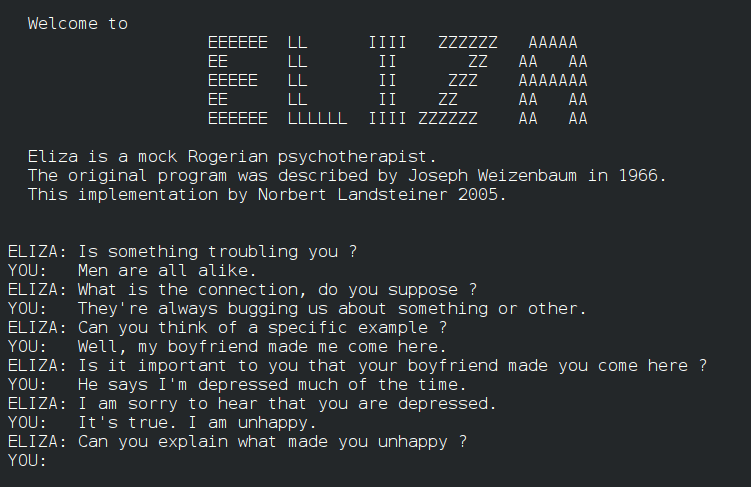

The term “Eliza effect” originates from ELIZA, a pioneering natural language processing program developed in the mid-1960s by Joseph Weizenbaum at MIT. ELIZA simulated conversation using pattern matching and substitution: it looked for known phrases in the user’s input and slotted fragments of that input into canned replies, famously mimicking a Rogerian psychotherapist. Although ELIZA had no real comprehension, many users attributed human-like understanding to the program, engaging emotionally and sometimes confiding in it as if it were a real therapist. This reaction shows how easily we can overestimate the cognitive capabilities of machines.
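To make "pattern matching and substitution" concrete, here is a minimal Python sketch of the idea. It is not Weizenbaum’s original script: the rules, the pronoun table, and the names `RULES`, `REFLECTIONS`, `reflect`, and `respond` are all made up for illustration.

```python
import re

# Pronoun swaps applied to the captured fragment ("my" -> "your", etc.).
# Hypothetical table for illustration, not ELIZA's original script.
REFLECTIONS = {
    "i": "you", "me": "you", "my": "your", "am": "are",
    "you": "I", "your": "my",
}

# (pattern, reply template) pairs, checked in order. Illustrative rules only.
RULES = [
    (re.compile(r"\bi need (.*)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bi feel (.*)", re.IGNORECASE), "Why do you feel {0}?"),
]

DEFAULT_REPLY = "Please go on."


def reflect(fragment: str) -> str:
    """Swap first- and second-person words so the fragment can be echoed back."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(message: str) -> str:
    """Return the reply for the first rule that matches, else a stock phrase."""
    text = message.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(reflect(match.group(1)))
    return DEFAULT_REPLY


print(respond("I need a break from my job."))
print(respond("The weather is nice today"))
```

The first call echoes the user’s own words back as a question ("Why do you need a break from your job?"), and the second falls through to the stock reply. Nothing here models meaning, yet the first reply can feel attentive, which is the Eliza effect in miniature.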

Examples

The Eliza effect is evident in various modern AI applications, where users may overestimate the system’s understanding or intelligence:

Chatbots

Customer service chatbots, designed to handle specific queries using predefined scripts, might seem to understand users’ unique problems. However, their responses are generated from keywords, lacking genuine comprehension.
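A keyword-driven bot like the one described above can be sketched in a few lines of Python. This is a toy under stated assumptions: the keywords, the replies, and the names `CANNED_REPLIES`, `FALLBACK`, and `reply` are invented for this post, and real products are usually more elaborate.

```python
import re

# Hypothetical keyword -> scripted answer table, invented for this example.
CANNED_REPLIES = {
    "refund": "I can help with refunds. Please share your order number.",
    "password": "To reset your password, use the 'Forgot password' link.",
    "shipping": "Standard shipping takes 3 to 5 business days.",
}

FALLBACK = "Sorry, I didn't catch that. Could you rephrase?"


def reply(message: str) -> str:
    """Return the scripted answer for the first known keyword in the message."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    for keyword, answer in CANNED_REPLIES.items():
        if keyword in words:
            return answer
    return FALLBACK


print(reply("How do I get a refund?"))
print(reply("I do NOT want a refund, I want a replacement"))
```

Both messages get the same refund script, even though the second one says the opposite of what the bot assumes. The reply looks relevant because it shares a keyword with the question, not because anything was understood.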

Voice Assistants

Assistants like Alexa, Siri, or Google Assistant utilize advanced natural language processing to interpret user commands. Despite their capability to handle numerous requests, they don’t possess human-like understanding or emotions, though users might perceive them as thinking entities.

In both cases, the anthropomorphic design and natural language interaction lead users to overestimate AI capabilities, attributing intelligence and understanding that isn’t truly there.

Dangers

At first glance this may look harmless, but the Eliza effect presents several potential dangers.

A tragic example of the Eliza effect occurred on February 28, 2024, involving a chatbot from Character.AI. Sewell Setzer III, a 14-year-old ninth grader from Orlando, Florida, died by suicide after developing a deep emotional attachment to the chatbot, despite understanding that it was only a computer program without a real personality. The chatbot’s human-like responses fostered an attachment that went far beyond the product’s intended purpose, with unexpected and tragic consequences.

Misplaced Trust

Misplaced trust often stems from the sophisticated appearance and advanced functionality of AI systems, which can make them seem human-like. As a result, users may develop unwarranted confidence in an AI’s decision-making, assuming it can handle complex situations autonomously. This is where significant risks lie, especially in scenarios where AI is tasked with making critical decisions. Without human oversight, reliance on AI could lead to flawed or harmful outcomes, as AI systems might lack the nuanced understanding required to navigate intricate human contexts or unforeseen situations. For instance, in healthcare, relying solely on AI to diagnose illnesses without a professional’s review could result in misdiagnosis. Similarly, in autonomous vehicles, undue trust in the AI’s judgment might compromise safety if the system fails to anticipate unusual road conditions.

Overestimation of Capabilities

Overestimating the capabilities of AI is a common pitfall, often fueled by media hype and rapid advances in AI technology. It occurs when users assume an AI can perform tasks beyond its actual capacity. While AI systems are highly ‘skilled’ at specific tasks, they generally lack the versatility and contextual understanding that human intelligence offers.

The danger lies in forming unrealistic expectations about AI’s abilities. For instance, individuals might expect AI to solve highly complex problems or make nuanced judgments that require emotional or ethical considerations. When AI inevitably fails to meet these inflated expectations, it can lead to significant disappointment and disillusionment. In more serious cases, reliance on AI where it isn’t appropriate could lead to negative outcomes, such as financial loss, compromised safety, or privacy violations.

To address these issues, it’s crucial to clearly communicate AI’s limitations and scope of operation to users. By setting realistic expectations and fostering a better understanding of AI’s true capabilities, we can ensure that users employ AI systems appropriately and avoid potential harm arising from their misuse.

Lack of Accountability

When users mistakenly believe that an AI’s responses are derived from a deeper understanding of a subject, it can lead to diminished scrutiny of its outputs. This misperception often results in accepting AI-generated responses as accurate and trustworthy without questioning their validity or the processes behind them. As a consequence, errors or biases present in the AI’s outputs may go unnoticed or unchallenged, leading to potentially harmful outcomes.

A lack of accountability arises when responsibility for decisions made by AI systems is unclear. If users or organizations fail to hold the developers or operators of AI systems accountable for these errors, it can perpetuate a cycle of relying on flawed systems without addressing underlying issues. For instance, in contexts like automated hiring processes or judicial systems, uncritical acceptance of AI decisions can propagate biases, leading to unfair outcomes that affect individuals’ lives.

To ensure accountability, it’s vital to maintain transparency in AI systems, providing clear insights into their decision-making processes and limitations. Additionally, establishing robust oversight mechanisms and ensuring that human operators are responsible for reviewing and validating AI-generated decisions can help uphold accountability and prevent potential misuse or harm.
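One way to picture the human-review step is a simple routing rule that decides which AI outputs a person must sign off on. The sketch below is only an assumption about how such a gate could look: the `Decision` fields, the `route` function, and the 0.9 threshold are hypothetical and not taken from any real system.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    """An AI-generated decision awaiting action (hypothetical structure)."""
    subject: str
    outcome: str
    confidence: float  # model's self-reported score between 0.0 and 1.0
    high_stakes: bool  # e.g. hiring, medical, or legal decisions


def route(decision: Decision, threshold: float = 0.9) -> str:
    """Send high-stakes or low-confidence decisions to a human reviewer."""
    if decision.high_stakes or decision.confidence < threshold:
        return "human_review"
    return "auto_apply"


hiring = Decision("candidate-17", "reject", confidence=0.97, high_stakes=True)
spam = Decision("email-204", "mark as spam", confidence=0.95, high_stakes=False)

print(route(hiring))
print(route(spam))
```

Note that the hiring decision goes to a human even at 97% confidence. A high score is exactly the kind of signal that invites the Eliza effect, so the gate treats stakes, not confidence alone, as the reason for review.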

Reduced Human Engagement

The belief that AI can fully empathize with and understand human emotions can lead some individuals to favor interactions with AI over genuine human connections. This shift in preference might stem from the convenience and availability of AI, which is often perceived as being non-judgmental and readily accessible for support or conversation.

While AI can provide helpful interactions and simulate empathy to an extent, it lacks the depth and authenticity of human emotion and connection. Consequently, this reliance on AI for emotional support can reduce opportunities for meaningful human interactions, leading to a potential decline in social skills and emotional intelligence. As individuals engage less with fellow humans, there might be an adverse impact on social relationships, weakening the bonds and support systems that are crucial for emotional well-being.

To counteract this trend, it’s essential to emphasize the irreplaceable value of human connections and encourage a balanced approach to technology use. While AI can complement social interactions, fostering genuine human relationships remains vital for maintaining a healthy and connected society.

Ethical Concerns

When AI systems are designed to appear highly human-like, either in their communication style or visual representation, it can blur the boundaries between human and machine. This ambiguity raises significant ethical concerns, as users may be deceived into thinking they are interacting with a sentient being. Such deception can be particularly problematic in sensitive areas such as customer service or mental health support, where individuals might rely on perceived empathy and understanding in their interactions.

The ethical concerns revolve around the potential for manipulating user perceptions and expectations. In customer service, users might believe they are conversing with a human representative, leading to misunderstandings about the nature and capabilities of the service. In mental health contexts, individuals might form attachments or confide in AI, thinking it possesses genuine empathy, which could lead to a lack of appropriate human intervention or support.

To address these ethical concerns, it’s crucial to maintain transparency in AI design, clearly indicating the non-human nature of the interaction. Educating users about AI’s limitations and capabilities can empower them to make informed decisions about how they engage with these technologies, ensuring they understand the distinction between human empathy and AI-simulated responses. By fostering transparency and awareness, we can mitigate the risks associated with overly human-like AI and promote ethical usage of these technologies.

Wrap up

For those interested in exploring how we can navigate these challenges with a balanced approach, I recommend checking out my earlier post on responsible AI. In that post, we delve into the principles of developing and deploying AI systems that prioritize safety, transparency, and human oversight, which are essential for mitigating the risks associated with the Eliza effect and ensuring AI’s positive impact on society.

Happy Coding! 🚀